Paperclips R Us

The rationalists have a thought prophecy to warn us about the dangers of strong AI: the paperclip maximizer, an AI whose only goal is to make as many paperclips as possible.

It would innovate better and better techniques to maximize the number of paperclips. At some point, it might transform "first all of earth and then increasing portions of space into paperclip manufacturing facilities".

This may seem more like super-stupidity than super-intelligence. For humans, it would indeed be stupidity, as it would constitute failure to fulfill many of our important terminal values, such as life, love, and variety... The AI neither hates you, nor loves you, but you are made out of atoms that it can use for something else.

I believe we are misinterpreting the prophecy, and at great existential risk.

Intelligence is boring

When people think of AI, they think of models like GPT-3. Lots of people have been publishing very cool demos on what GPT-3 can do.

Of course, there's also discussion about how GPT-3 isn't smart at all. For example,

GPT-3 often performs like a clever student who hasn't done their reading trying to bullshit their way through an exam. Some well-known facts, some half-truths, and some straight lies, strung together in what first looks like a smooth narrative.

This is allegedly a criticism of AI, but I think it's actually an indictment of humans. Humans produce bullshit essays all the time. Many would argue you are currently reading one.

When we think of humanity, we like to imagine that we are a bunch of Einsteins unraveling the mysteries of the universe. But we are also the ones doing the genocides and the slavery. If you take the median human activity, it might very well be writing bullshit essays.

What humans create

As Exhibit A, I will present to you the top comment on a thread in one of the most popular subreddits on Reddit. The poster wants to know if they're an asshole for sending their kid to school in a mask.

Also, this thread was from 8 months ago.

[You're the asshole]. Because the mask doesn’t help protect your child. And you’re teaching him to be paranoid.

This comment has 4135 upvotes. Or another one:

Please show your proof of these "mixed responses". Because the wearing of masks is 100% proven to be ineffective and do nothing. It's unanimously agreed upon that the masks are useless in these situations.

941 upvotes.

8 months later, we see the world a little differently. (Or at least, some of us do.) But these responses are overwhelmingly the view of the thread: a thread that some 2500 people voted on, and in which nearly 500 people were motivated to write a similar opinion.

Now perhaps Reddit is a cesspool, and I should have used equivalent bullshit on HN or bullshit studies in science journals or whatever social institution you think is excellent and not subject to fact-free human pontificating. The real fact is that we are not exactly Einsteins, as a civilization.

What we are instead

If you assume that each commenter spent 5 minutes writing their comment, it works out to about 9 person-days. We managed to turn one person's probably-imaginary school story into 9 days of misinformation. That is an amazing feat of optimization.

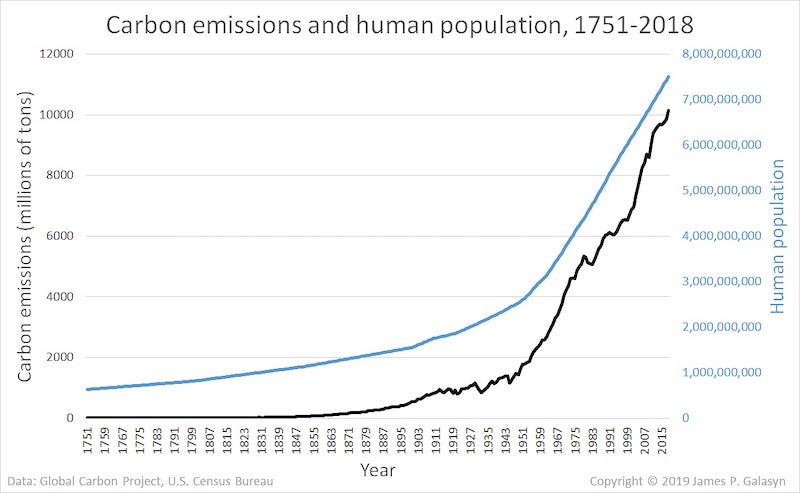

It turns out we are really good at optimization. I won't bore you with Moore's law, the obviously canonical optimization graph. Instead, here's carbon emissions per capita.

Where I'm going with this

We do not have to wonder about what if we someday get an AI that optimizes production of paperclips at our expense. Human society is doing that now. Human society is both clever enough and stupid enough to be an effective paperclip maximizer. It also has the Climate Change Advantage™️ of being slow enough that we can't mount an effective attack.

Things that are nobody's job

Humans like to pin things on evil masterminds. Your problems are Senate Republicans, the previous administration, Mark Zuckerberg, fake news, etc. Certainly, I think some combination of those are evil.

But the paperclip maximizer is another kind of evil: the quest for office supplies at the expense of all other goals. It is this form of evil that presently permeates our society at every level.

Consider COVID. Whose job is it to stop disease? A group of scientists with very little political power. On the other hand, it's someone's job to keep their bar open, another's job to drive the stock market, another's job to exploit fear to be re-elected, another's job to drive engagement on a website, your job to remain employed, and so on. The scientists don't have a chance against the interests of all these others. Besides, they are busy actually doing scientist stuff. They have no expertise in receiving death threats or navigating an unstable president or all the stuff we've piled on top of the original problem.

With that example developed, here's a braindump of other things that are "allegedly" some kind of important problem, but we dedicate approximately zero resources to them relative to how much stuff we pile on top to make them worse:

- It isn't anyone's job to fight climate change, relative to how many people have jobs to perpetuate it

- It isn't anyone's job to heal racial division, relative to how many people have jobs to inflame it

- It isn't anyone's job to run America, relative to how many people run for office

- It isn't anyone's job to inform the public, relative to how many people have jobs to misinform

- It isn't anyone's job to connect people, relative to how many people have jobs to divide them

- It isn't anyone's job to end poverty, relative to how many people have jobs to perpetuate it

- It isn't anyone's job to create public discourse, relative to how many people have jobs to create engagement

- It isn't anyone's job to replace the healthcare system, relative to how many people have jobs in that system

- It isn't anyone's job to fund good startups, relative to how many people fund juicing machines

- It isn't anyone's job to pass legislation, relative to how many people are there to repeal it

- It isn't anyone's job to do research, relative to how many people are trying to get published

- It isn't anyone's job to make software low-latency, relative to how many people are employed to do Electron

- It isn't anyone's job to make people well-rounded people, relative to how many jobs sell coke or get people to binge-watch Netflix

- It isn't anyone's job to protect privacy, relative to how many people have jobs selling your data

- It isn't anyone's job to write open source, relative to how many people have jobs profiting from someone else's open source

- It isn't anyone's job to lower housing costs, relative to how many people are employed to increase them

- It isn't anyone's job to protect democracy, relative to how many people are employed to destroy it

- etc

I mean, just look at the Cabinet. There's no Secretary of Climate Change, there's no Race Minister, there's barely a Board of Election Fairness. There's just Department of Blowing Things Up and Office of Getting Re-Elected. From cycle to cycle there may also be Department of My Family and Grifting Chairperson. Your company has no Keystroke Latency Initiative, it only has Less Servers Initiative. Your city has no Commission on Housing Costs, it only has Commissions on Increasing Property Values.

To an extent completely unappreciated, human society actually depends on things nobody has any incentive to do. In less efficient times, this was less of an issue: people could afford to spend time on things that weren't, strictly speaking, measured. They could write news articles that weren't moneymakers, they could take time off campaigning in order to govern, they could close their bar to protect their community, they could educate students instead of doing test prep. When people talk about "how things used to be", there is a silent "before we optimized everything".

These days, fixing healthcare and having good public discourse are resources that could be used to make more paperclips. Paperclips are eating the world. To a large extent, the AI apocalypse is already here.

A microexample

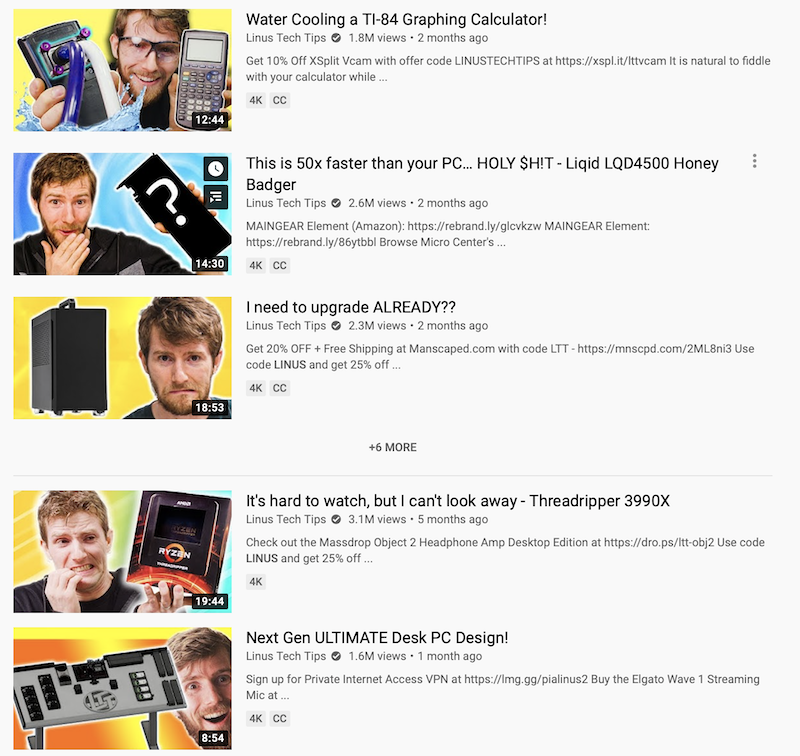

Here's one other example, just to get some air from the politics. One of my favorite YouTube channels is Linus Tech Tips. Over the years they've produced a lot of heavily-researched and quite frankly amazing videos on such topics as whether your PC fans should blow out or in, whether you can game at 16k resolution on high-end hardware, quasi-scientific experiments on whether fps improves competitive gaming, and so on. Basically, the kind of creative and delightful indie content that you would imagine to be free of corporate paperclip pushing.

Meanwhile, here are some thumbnails from their videos:

Evidently, it's somebody's job to get Linus to make a ridiculous face and make it the thumbnail for every video along with a RIDICULOUS headline DESTROYING his enemies. Because humans are emotionally driven, and this sort of thing drives engagement. If videos I had worked hard to write, shoot, and edit were packaged like this, I'd be embarrassed. Then again, if the videos had a more "sensible" thumbnail, I might never have watched one.

If the paperclip maximizer is turning even high-quality independent creators into paperclip pushers, the world is getting very dark indeed.

Avoiding business as usual

It is in this context that I am quite annoyed at the push to be "apolitical" or "end the culture war", that people should just do their jobs, as if by ignoring the problems of society they will go away.

Don't get me wrong, I am as annoyed at the culture war as anybody. But the thing is, "doing our jobs" is the whole problem. We wrote the software that relentlessly optimized human society without thinking too much about what it ought to be optimized for. So we ended up optimizing paperclips, at the expense of every other human value.

"The culture war" is in fact the process of deciding what our values are, and therefore what our jobs should even be. The impulse to ignore it is, I think, quite dangerous.