I have discovered that there are two mental models for computer programs.

- Software takes one kind of data and turns it into another kind of data. Data is king. I will call this the “black box” mental model. This is the model used by most computer scientists, programmers, and advanced users.

- Software is pixels on the screen. A program is entirely defined by a screenshot. Pixels are king. You click buttons and stuff happens on your monitor. Never mind the underlying data model. This is the mental model used by most computer users. I call this the “pixels” mental model.

The thing about a mental model is that it is automatic. You do not think about it, you think with it. Your expectations are completely defined by your mental model for what software is.

Users believe the pixels are the software. When I show somebody the mockups for an iPhone app, they ask how many minutes it will take to code it up. To them, it’s already built! The hard part, the pixels, is already done. What’s left is invisible, intangible nothingness: the code, the backend. Far too many of them, at their own peril, never actually test the real thing. If it looks good, it must work! For them, the whole world is only what is visible. They cannot see invisible things.

When you look at a building, you look at the paint. The finish on the cabinets. The wallpaper. The color. You don’t think about the tensile strength of steel unless you’re a builder. This is how users think about software: by its gloss.

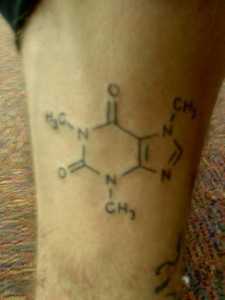

The thing about the “black box” model is that it requires you to visualize. When I write code, I have a debugger running in my head that considers particular values for variables. When I write a graph algorithm, I see Prim’s algorithm running in my head, just like this guy. That’s a big part of what makes me effective as a developer: I can visualize invisible things. Compilers, debuggers, machine code, software architectures, object graphs, register allocation, memory maps, and dozens of other things all fly before me as I type out code.

I see stuff like this as I code.
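To give non-programmers a taste of what “seeing an algorithm” means, here is a minimal Python sketch of Prim’s algorithm. It grows a minimum spanning tree outward from a start node by repeatedly taking the cheapest edge that reaches a node not yet in the tree. The adjacency-list format, the function name `prim_mst`, and the sample graph are illustrative choices of my own, and the sketch assumes a connected, undirected graph.

```python
import heapq

def prim_mst(graph, start):
    """Return the edges (u, v, weight) of a minimum spanning tree.

    Assumes `graph` is connected and undirected, given as a dict mapping
    each node to a list of (neighbor, weight) pairs.
    """
    visited = {start}
    # Min-heap of candidate edges leaving the tree, keyed by weight.
    frontier = [(w, start, v) for v, w in graph[start]]
    heapq.heapify(frontier)
    mst = []
    while frontier and len(visited) < len(graph):
        w, u, v = heapq.heappop(frontier)
        if v in visited:
            continue  # this edge would form a cycle; skip it
        visited.add(v)
        mst.append((u, v, w))
        # Every edge from the newly added node becomes a candidate.
        for nxt, nw in graph[v]:
            if nxt not in visited:
                heapq.heappush(frontier, (nw, v, nxt))
    return mst

if __name__ == "__main__":
    # Hypothetical sample graph for illustration only.
    graph = {
        "A": [("B", 2), ("C", 3)],
        "B": [("A", 2), ("C", 1), ("D", 4)],
        "C": [("A", 3), ("B", 1), ("D", 5)],
        "D": [("B", 4), ("C", 5)],
    }
    print(prim_mst(graph, "A"))
```

The heap is the part I “see”: a pile of candidate edges, with the cheapest always on top, shrinking and growing as the tree spreads across the graph.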

But the danger of getting good at this is you come to believe a lie: you come to believe that pixels don’t matter. But they do. And not just to inexperienced users. They matter to you.

I’ve been on a quest to digitize everything. I’ve scanned all my books. Almost every piece of paper I interact with gets dumped into Evernote, from receipts to insurance offers. I have hundreds of gigabytes of greppable, high-quality information at my fingertips even without the Internet. If I want to look up my insurance policy from my phone, well, it’s easy. In theory.

In practice, Evernote is basically unusable on an aging iPhone 3GS. It takes something like five full minutes to call up that insurance policy document. Can’t do that in a checkout line. Their full-text search desperately needs an optimization overhaul. There is basically no application or service that will let me reliably search 20k PDFs from a mobile device. So although in theory I have all this greppable data available in the cloud on various services, in practice I am no better off than if I kept it in a pile of boxes at home. I suppose I could roll my own SQLite database and carry around a few hundred gigs of data on a hard disk all the time, but nobody’s going to do that, not even me. It’s absurd.

Consider the book scanning. Doing full-text search across my entire dead-tree library on a quad-core machine rocks. It is absolutely the best way to consult nearly the entire corpus of my knowledge; it’s an incredible experience when it comes time to do some research or write a blog post. But for actually reading books, not so much. Digital books are only now starting to catch up in the readability department. The pro is that my iPad is fantastic for reading in poor lighting. The con is that it stutters through pages, which makes reading large files super annoying. And I was scanning books long before the iPad, back when readability was close to zero. In other words, scanning my books was a great idea, but only in those cases where the physical book was a bad user interface. Technology is still playing catch-up to dead trees for actual reading, and will be for a while.

As programmers, we are often on a quest to parse datasets. Let’s look for trends in the HN comments or track price changes on Amazon! Let’s argue about JSON vs YAML for parseability! Let’s download everything from Project Gutenberg, and start tracking our gas mileage to optimize our driving or predict traffic on our way to work or whatever. The trouble is that data isn’t really the problem anymore. We have enough graph traversals, we have enough algorithms, we have enough datasets, we have enough programming languages, we have enough frameworks, ORM layers, databases, development processes, build servers, message passing libraries, RPC methods, design patterns, and development methodologies. Relative to all of this, user interfaces are in the stone age. Wait, that’s unfair to the stone age. We had tools in the stone age. Only in the last couple of years have we started to think about touching things in computing. You know, the sort of manipulation that jellyfish do. This revolutionary idea occurred to the industry in about 2008. Apple has risen to power as the world’s largest company, basically, by writing software for jellyfish.

This guy absolutely destroys the current state of UI/UX design. It is unbelievably bad. The usability of sending an e-mail from your device-du-jour is orders of magnitude worse than opening a jar of jam. Using a computer to interact with a system is about as hard as trying to interact with the world as a quadriplegic. Your interface to the outside is severely limited. The ordinary processes you use, like tactile feedback and spatial organization, are completely unavailable to you. You might as well be communicating via eyeball movement. It’s that bad.

This is why I get frustrated with people who get mad at all the “Yet Another Twitter Client” projects. As if a Twitter client were just a bit of glue around an API. (To be fair, many are.) It doesn’t have to be. It’s a user interface for talking to your friends. Building a scalable message-passing architecture is a solvable problem. Improving upon face-to-face communication may not be. Twitter clients are, in fact, among the hardest types of software you can write.

Let me give you a brief reality check. People are all up in arms these days because software is too cheap. It is starting to become price-competitive with coffee and candy, and we are frustrated when users won’t pay the cost of a single candy bar to download our app.

Well, guess what? Millions of years of evolutionary biology have programmed you to buy candy or drink coffee. When you have a latte, neurochemical stuff happens: you release endorphins, stuff crosses the blood-brain barrier, adenosine receptors go crazy, it’s pretty much dopamine in a cup. Not to mention it is physically addictive, almost as much so as heroin, nicotine, or cocaine. Under the right circumstances people kill each other over this stuff. How is some CRM system you’ve written going to compete with that? What commit are you going to make today that people are going to fight a war over? Food interfaces with your brain. Software interfaces with a few square inches on some hard, lifeless surface. Your software competing with a candy bar? Please. A candy bar would have to be hundreds of times the price of your app before it was even a fair fight. Chocolate gives you dopamine. Software gives you pretty lights on a screen. Not even close.

Our user interfaces are in the amoeba stage. The iPad isn’t a game-changing device because of its user interface. It’s a game-changing device in spite of its user interface. An iPad can beat a computer at some things computers do. But if there is a pencil-and-paper equivalent that is even remotely viable, you are going to be hard-pressed to beat it with an iPad on the UX front. If you’ve taken notes in a math class on an iPad, raise your hand. Or worked an engineering problem. Or used it as scratch space to debug a programming problem. Or used it as a whiteboard. Pretty much all I use mine for is things I used to do with a computer: browse the web, watch video, write a quick e-mail, and, depending on my frustration level, try to read a PDF. It’s an incremental improvement, mostly for things computers already do. But for the things that paper and pencil are good at, it is usually not the right tool. (Not without aeons of thought from a UX person, anyway.) There are entire classes of problems that cannot yet be solved effectively with computers, because we’re very much in the dark ages of UX.

But as developers we don’t want to hear that. We have spent decades learning how to interact with the world through the window of a 17″ monitor. Perhaps 1% of the world’s population has developed this arbitrary and uniquely worthless skill. We want to believe, purely because it is hard, that it is good. There are startups and large companies alike full of brilliant engineers who spend millions of hours and millions of dollars writing code that fails to produce as much value as a single cup of coffee. We actively celebrate how much money we spend to produce these totally worthless systems, issuing press releases about how many millions of capital we’ve raised for some technology that arranges the lights on the screen in a slightly more appealing way. It is a parody of progress, on par with any Onion article, as if we derive obscene pleasure from the sheer waste of human effort.

This is all very interesting, but it is part of an important cognitive bias: when you have a hammer, the whole world looks like a nail. As developers, we start with “What software should we write?”, not “What problems should we solve?” We start with a platform, a language, a tool. When it’s time to market our product, we again look within the four corners of our 17″ monitor: SEO, CPC, and blogging, but little actual communication. When it comes time to understand our customers, our rectangular window to the world shows us charts, analytics, behaviors, and data, but no real observation of our customers in the field. Meanwhile, a bunch of MBAs who aren’t handcuffed to the keyboard make a killing.

This is why Steve Blank has to tell people, “Get out of the building.” The sort of people who build software for a living (that is, me) are incredibly bright engineers who are good at using awful, terrible tools. You wouldn’t build a house out of cardboard, would you? Don’t do sales sitting at a keyboard. You wouldn’t try to understand your customer base from a telescope on the moon, would you? Don’t try to figure it out with an analytics dashboard. Just showing up and talking to people has so much more bandwidth than the fiber they run to your office.

As developers we often, rightly, criticize our users for believing that the pixels are the software. But for many programmers, myself included, the whole world is pixels. If it isn’t observable on a computer monitor somewhere in the world, it doesn’t happen. Truth is a record in a database. We use words like “canonical reality” to talk about source control and database synchronization. We’ve put the “multiverse” in our package manager. We spend decades of our lives turning this invisible set of things into that invisible set of things. Now honestly, who has the wackier mental model?

Comments

I’ve often had conversations with my brother-in-law, who is a trained and practicing electrician. These conversations usually start with me saying that after the apocalypse, he at least has a skill that could be useful to the human race. Mine, not so much: I’m a software developer. I think he may suspect I’m trying to find a roundabout way to belittle his chosen profession, but really, I value his contribution to society much more than I value mine. We software developers are the equivalent of ghosts; it’s impossible for software developers to have a _direct_ and lasting impact on the physical world.

That’s the interesting thing about where our society seems to be headed – it can be wiped out so much more easily than a society based on something rooted completely in the physical world. Yeah, it’s cool to be able to make money building the next new actuarial program but where will you be when no-one’s building the electrical infrastructure that enables you to do that job or when someone else takes that infrastructure away… food for thought.

New reader here. I really like your work, thanks for writing.

Fantastic food for thought! I really like the way you approach the issue of usability. Insightful and something to be thought about.